Exposing an API as a UI versus as REST endpoints involves a lot of tradeoffs. What tradeoffs are we making, and more importantly, how do we get the best of both?

The way I think of an app is that it stores some data (maybe on your local machine, maybe on a remote server), and it gives you an API to manipulate that data. AFAIK that pretty much describes what every app does.

That API can manifest itself in the form of a UI. It doesn't have to, it can also be a REST API, but sometimes there's a UI component. The UI's actions have to map to the underlying API. That's why I think UI/UX is really just a form of API Design.
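Here is a minimal sketch of that idea, under my own assumptions: the `NotesApi` class, the button handler names, and the `/notes` routes are all made up for illustration. It shows one underlying API with a UI and a REST-style router sitting in front of it, both mapping onto the same operations.

```typescript
// Hypothetical in-memory "app": some stored data plus an API to manipulate it.
type Note = { id: number; text: string };

class NotesApi {
  private notes: Note[] = [];
  private nextId = 1;

  create(text: string): Note {
    const note: Note = { id: this.nextId++, text };
    this.notes.push(note);
    return note;
  }

  list(): Note[] {
    return this.notes.slice();
  }

  remove(id: number): boolean {
    const before = this.notes.length;
    this.notes = this.notes.filter((n) => n.id !== id);
    return this.notes.length < before;
  }
}

// Front end 1: a UI, modeled as named button handlers.
function makeUi(api: NotesApi) {
  return {
    clickNewNote: (typedText: string) => api.create(typedText),
    clickDelete: (noteId: number) => api.remove(noteId),
  };
}

// Front end 2: a REST-style router, modeled as (method, path, body) -> result.
function handleRequest(
  api: NotesApi,
  method: string,
  path: string,
  body?: { text: string }
): unknown {
  if (method === "POST" && path === "/notes" && body) return api.create(body.text);
  if (method === "GET" && path === "/notes") return api.list();
  const match = path.match(/^\/notes\/(\d+)$/);
  if (method === "DELETE" && match) return api.remove(Number(match[1]));
  throw new Error(`No route for ${method} ${path}`);
}

// Both front ends end up calling the same underlying operations.
const notesApi = new NotesApi();
const notesUi = makeUi(notesApi);
notesUi.clickNewNote("written through the UI");
handleRequest(notesApi, "POST", "/notes", { text: "written through REST" });
const allNotes = notesApi.list();
```

Seen this way, every button in the UI is a decision about which API operation to expose and how, which is the same kind of decision you make when naming a route.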

Where this becomes really interesting is when you start to explore what kind of tradeoffs you're making by presenting an API as a UI vs a REST API. These are the two dominant ways of exposing an API, but neither of them is very good. By their nature, UI and REST API best practices tend to limit how fast we can interact with the API, how much work we can get done with it, or both.

Pros/cons of a traditional UI

Limitations of interacting with a traditional UI:

Very slow. Moving a cursor and figuring out where to click introduce a lot of friction.

Limited by designers. The designers decide what actions to make available to you, and the best way to interact with the API. They naturally try to make it usable by everyone, rather than tailored for you.

Cannot be automated.

Pros of interacting with a traditional UI:

Very fast to get started. You can hop on a completely new web page, and usually, within a few seconds, you kinda understand where to go and how to use it.

Visualization. Having the whole 2D space available to the app lets it show and explain the data in helpful ways. Like sliders, or Figma, or many other tools.

Rich interaction. You can communicate to the API by typing in words, or pressing buttons, or dragging sliders.

Pros/cons of a traditional REST API

Limitations of interacting with a traditional REST API:

Need to write/understand code. This is a big one! Nobody likes writing and debugging code, and very few people can do so.

Very slow to get started. Authentication, getting API keys, maybe even creating a separate developer account, then reading the getting-started docs.

Constrained to text. You'll usually get some sort of JSON response in your black and white terminal. It's ugly, long, and overwhelming.

Poor interaction. The only way to communicate with the API is passing in the serialized data as a parameter to the endpoint.

Pros of interacting with a traditional REST API:

"Easily" automated. Easily in quotes here because it still involves writing code.

Powerful. Even simple CRUD endpoints can be composed to let you do lots of things quickly.

Can interact with other services (if they expose an API too).
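To make the "Powerful" point concrete, here is a small sketch of composing CRUD operations. Everything in it is an assumption for illustration: `getTasks` and `patchTask` are in-memory stand-ins for something like `GET /tasks` and `PATCH /tasks/:id`, not any real service.

```typescript
type Task = { id: number; title: string; done: boolean; archived: boolean };

// Hypothetical data that a real service would keep behind its endpoints.
let taskRows: Task[] = [
  { id: 1, title: "write post", done: true, archived: false },
  { id: 2, title: "fix typo", done: false, archived: false },
  { id: 3, title: "ship", done: true, archived: false },
];

// Stand-in for GET /tasks.
function getTasks(): Task[] {
  return taskRows.slice();
}

// Stand-in for PATCH /tasks/:id.
function patchTask(id: number, changes: Partial<Task>): Task {
  const current = taskRows.filter((t) => t.id === id)[0];
  if (!current) throw new Error(`404: no task ${id}`);
  const updated: Task = { ...current, ...changes };
  taskRows = taskRows.map((t) => (t.id === id ? updated : t));
  return updated;
}

// "Archive everything that is done" is not an endpoint, but it falls out
// of one read followed by several updates.
function archiveFinishedTasks(): number[] {
  return getTasks()
    .filter((t) => t.done && !t.archived)
    .map((t) => patchTask(t.id, { archived: true }).id);
}

const archivedIds = archiveFinishedTasks();
```

A traditional UI would need a designer to have anticipated an "archive all finished" button. With the endpoints, the bulk action is a few lines, at the cost of having to write them.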

These are pretty big tradeoffs! For most people, who don't interact with REST APIs, it probably feels like a traditional UI is really the only paradigm possible. For me, neither "feels" very good to use. It's probably possible to make better interactions! And certainly people have. Other interaction methods include:

Terminal "interpreter-style" concepts: lots of apps nowadays are introducing "command-line" ideas. Instead of having to click and find your way through menus, you can just write what you're looking for, or want to do. Gaby Goldberg has a great write-up about it here:

The Command Line Comeback

In consumer, everything old is new again. As products get flashier with more features and shinier buttons, we crave a return to our roots…

Type-system ideas: Paul Chiusano argues for type systems in UIs:

Paul Chiusano: Why type systems matter for UX: an example

Node-style graphs like in Unreal Blueprints or Unity Shaders or darklang. These let you build and compose visually but they can get cluttered quickly.

AR/VR!

There are probably a lot more, and you can probably think of some, but these are just proofs that other ideas exist.

However, I'm curious about approaching the problem from the other end. We want to create a "natural"-feeling API. So it seems productive to define what natural means. The ideas I'll describe are all in this older blog post, but here I arrive at them from a different direction.

So defining what natural means to us depends on how we interact with a computer. For most people, that's primarily mouse and keyboard (let's keep mobile and accessibility a separate discussion). We can think of a mouse pointer as a moving point in space and time, while clicks are occasional signals sent in time. Key presses are also signals sent in time, except there are a lot more options. It's also a lot faster to send keyboard signals, and several can be sent at once.

This is why apps like Raycast and the "terminal-style" ideas are so popular! They replace slow clicks with fast typing. Maybe we should write what we want to do, all the time?

(Does this mean the keyboard is just better?)

No, just that it matches up better with this sort of action. With an API operation, you're asking the computer to perform some sort of action. We're most used to this through written or spoken word. But that's certainly not all: when API parameters include dimensions that are hard to "serialize" to text, that's where things like joysticks and mice can come in. Like dragging a Figma board. But those kinds of API endpoints tend to be rarer.

Vim is actually a great example here. A cursor position is the sort of spatial thing that's hard to serialize to text but easy to "point" to. It's more comfortable for me to click where I want my cursor to be, whereas in Vim, I'd have to specify the line number and character number (I think?)

But this can also get tough quickly. Imagine knowing the exact proper name for everything on your screen, or worse, knowing some sort of id!

How do we deal with this problem in real life? When we're talking to people, we're often saying things like "take that upstairs" or "move the piano downstairs". These are words that take on meaning from context. Context is important; it lets us do and communicate things through ambiguity. Leveraging ambiguity can be more productive than exact syntax. This is something traditional UIs get right and REST APIs do not. A "new" button will make sense in a UI from the context, but a REST API needs you to be more specific (far more specific).
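As a rough sketch of what "context resolves ambiguity" could look like, assume three made-up contexts and made-up paths. The user only ever says "new"; the current context supplies the specific request that a REST API would demand.

```typescript
// Which screen the user is "standing in" (hypothetical contexts).
type ContextName = "repositories" | "projects" | "users";

// The precise, REST-like form that the API ultimately needs.
type ExactRequest = { method: "POST"; path: string };

// Each context gives the same short word its own exact meaning.
const meaningOfNew: Record<ContextName, ExactRequest> = {
  repositories: { method: "POST", path: "/repositories" },
  projects: { method: "POST", path: "/projects" },
  users: { method: "POST", path: "/users" },
};

// Turn an ambiguous word plus the current context into an exact request.
function resolveCommand(word: string, current: ContextName): ExactRequest {
  if (word !== "new") throw new Error(`Unknown command: ${word}`);
  return meaningOfNew[current];
}

// The same word, said in two different places, means two different things.
const inRepos = resolveCommand("new", "repositories");
const inProjects = resolveCommand("new", "projects");
```

The lookup table is doing the job that a page layout does in a UI: it is where the "far more specific" part lives, so the person issuing the command doesn't have to carry it.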

At this point, we've figured out that a good interaction framework with an API needs to be keyboard-first, and context-aware. I also think it should be a few other things:

Very fast to get started. Should be as easy as navigating to a web page.

Rich visualization and interactions. There should be sliders, color wheels, maps, all sorts of stuff on my page.

Easily automated. I should be able to (with one click of a button) set off a bunch of actions I often do in sequence.

Powerful. I need the full range of interaction with the underlying API.

Composable. Ideally, let me work with other services.

without all of these:

Very slow.

Limited by designers. It needs to be customizable to how I use it.

Need to write/understand code. Ugh!

Constrained to text.

If they sound familiar, that's because they're all the pros and cons respectively from the two lists above 😄. I don't think this is impossible! At the very least, the tradeoffs from a traditional UI and REST API do not need to be nearly so steep.

I think the things I've outlined here in terms of good design are overlooked: people seem to think UI design and REST API design are two different worlds and that's not true. They're the same world, split in two halves, unaware that there's a lot to learn from the other side! This follows my larger hunch that design and programming are pretty much the same thing, just in different contexts.

To end off, here are some ways of interaction that "feel" closer to the right way:

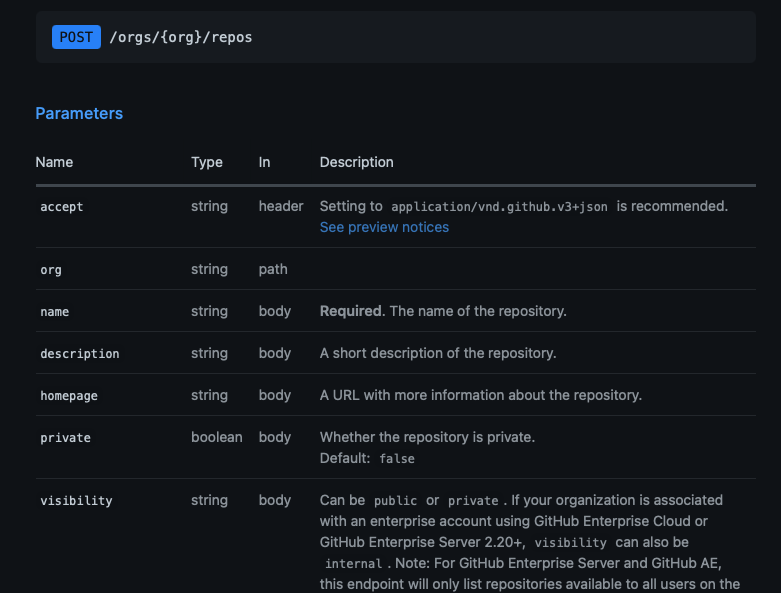

API consoles

You know how lots of API docs include these consoles where you can call the endpoints:

image:/posts/ui-as-an-api/spotify-console.png

This checks so many boxes! Easy to understand and get started with, but also very powerful! Look at this beautiful interaction where if I forget to supply an OAuth Token, it helpfully lets me create a quick demo token with proper scopes:

image:/posts/ui-as-an-api/scopes.png

Of course, though, the endpoints aren't composable, and often the results aren't super helpful either: just a JSON dump. They're just meant as examples, but man would I love it if an app made this a dedicated way to interact.

The terminal-style ideas from before but context-aware

Again, a link to my older blog post talking about combining the main keyboard-first interaction of the terminal ideas with the point and click of the mouse: Combination of spatial and text instructions. I.e. think of clicking on a Figma board and then, once it's selected, typing "apply autolayout", or saying "put this in here" where you specify "this" and "here" by clicking on them.

Some of the terminal ideas are already context-aware but AFAIK they don't combine the spatial input with the mouse.
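Here is a minimal sketch of how "put this in here" might be resolved, assuming clicks arrive as a simple ordered list. The `Click` shape, the `resolveDeictic` function, and the element ids are all hypothetical. Each pointing word gets filled in by the next click, in order.

```typescript
// A click, reduced to the id of whatever was clicked (hypothetical shape).
type Click = { targetId: string };

type ResolvedCommand = { verb: string; args: string[] };

// Replace each pointing word ("this", "that", "here", "there") with the
// element that was clicked next, in the order the clicks happened.
function resolveDeictic(sentence: string, clicks: Click[]): ResolvedCommand {
  const pointerWords = ["this", "that", "here", "there"];
  const words = sentence.trim().split(/\s+/);
  const verb = words[0] || "";
  const args: string[] = [];
  let nextClick = 0;
  for (const word of words.slice(1)) {
    if (pointerWords.indexOf(word) === -1) continue; // skip filler like "in"
    const click = clicks[nextClick++];
    if (!click) throw new Error(`"${word}" has no matching click`);
    args.push(click.targetId);
  }
  return { verb, args };
}

// Typed text carries the action; the mouse carries the hard-to-serialize parts.
const moved = resolveDeictic("put this in here", [
  { targetId: "card-7" },
  { targetId: "column-done" },
]);
// moved is { verb: "put", args: ["card-7", "column-done"] }
```

The typed sentence never has to mention an id, and the mouse never has to find a menu. Each input device handles the part it is good at.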

Idea of contexts in an API

Usually in a REST API, you specify the whole unambiguous action up front. What if you could enter "contexts" the same way you navigate to different pages in a traditional UI? Something like api.enterContext("currentUser").enterContext("repositories").createNew().run(), where the createNew action might also exist in other contexts (such as projects or users). This may lower the barrier to entry (though not by much if you still have to write code). It has the added benefit, however, that it becomes possible to map UI interactions onto the REST API in an elegant way.
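One way the enterContext chain could be sketched is below. This is only an illustration under my own assumptions: the `ApiContext` and `PendingAction` classes are invented here, and mapping each context to a path segment is one possible design. `run()` just returns the request it would send, so the example stays self-contained.

```typescript
type PlannedRequest = { method: string; path: string };

// A pending action that only turns into a request when run() is called.
class PendingAction {
  private readonly request: PlannedRequest;

  constructor(request: PlannedRequest) {
    this.request = request;
  }

  run(): PlannedRequest {
    // A real client would send the request here; this sketch just returns it.
    return this.request;
  }
}

class ApiContext {
  private readonly segments: string[];

  constructor(segments: string[] = []) {
    this.segments = segments;
  }

  // Entering a context is like navigating to a page: it narrows what
  // later actions refer to, without performing anything yet.
  enterContext(name: string): ApiContext {
    return new ApiContext(this.segments.concat(name));
  }

  // The same action name works in any context; the context supplies the meaning.
  createNew(): PendingAction {
    return new PendingAction({
      method: "POST",
      path: "/" + this.segments.join("/"),
    });
  }
}

const contextApi = new ApiContext();
const planned = contextApi
  .enterContext("currentUser")
  .enterContext("repositories")
  .createNew()
  .run();
// planned is { method: "POST", path: "/currentUser/repositories" }
```

Because each `enterContext` call returns a new object, a UI could hold on to the context for the page you're on and let a "new" button simply call `createNew()` on it, which is the UI-to-REST mapping described above.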